What Is a Google Crawl Budget and How to Optimize It?


You are probably aware of Googlebot, Google’s search engine crawler, which crawls your website’s pages so that Google can understand and index the content on each page. A page has to be crawled and indexed before it can rank in the search engine results pages (SERPs). If your website has thousands of pages, Googlebot may have difficulty crawling every one of them, so deciding what gets crawled, when, and how often becomes crucial.


What is a Crawl Budget?

Crawl budget refers to the number of pages (URLs) on your website that Googlebot can and wants to crawl within a given period. It is determined by two factors: the crawl rate limit and crawl demand.

What is Crawl Rate Limit?

The crawl rate limit keeps Googlebot from sending so many requests that it slows down your website. The crawl rate depends on the following factors:

Speed:

Your server’s response time can raise or lower Googlebot’s crawl rate. The slower the site responds, the fewer pages get crawled.

Search Console Crawl Rate Limit:

You can limit Googlebot’s crawl rate in Google Search Console. However, setting a higher limit does not guarantee a higher crawl rate.
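To see why a slower server means fewer crawled pages, here is a toy calculation. It is a simplified model of our own, not Googlebot’s real algorithm: it assumes the crawler keeps a fixed number of parallel connections open and sends the next request on a connection only after the previous response arrives. The function name `max_pages_per_day` and the connection count are hypothetical.

```python
# Toy model for illustration only (an assumption, not Googlebot's real algorithm):
# the crawler keeps a fixed number of parallel connections open and sends the
# next request on a connection only after the previous response arrives.

SECONDS_PER_DAY = 24 * 60 * 60


def max_pages_per_day(parallel_connections: int, avg_response_seconds: float) -> int:
    """Upper bound on pages fetched per day under the toy model above."""
    if parallel_connections < 1 or avg_response_seconds <= 0:
        raise ValueError("need at least one connection and a positive response time")
    requests_per_connection = SECONDS_PER_DAY / avg_response_seconds
    return int(parallel_connections * requests_per_connection)


if __name__ == "__main__":
    # Hypothetical response times for the same site on a fast and a slow server.
    for seconds in (0.5, 1.0, 2.0):
        print(f"{seconds} s per response -> {max_pages_per_day(2, seconds):,} pages/day")
```

With two connections, slowing from 1 second to 2 seconds per response halves the ceiling from 172,800 to 86,400 pages a day. Real crawling also depends on crawl demand, server errors, and Google’s own limits, so treat this only as an illustration of the trend.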

What is Crawl Demand?

If demand for a page is low, Googlebot will crawl it less often, even when the crawl rate limit has not been reached. Popular pages and pages that are updated regularly have higher crawl demand, and a page’s reputation also plays an important role. This is why it pays to maintain high-quality, original content.


Important Factors That Affect Crawl Budget:

- Having many low-value URLs on your website can eat into your crawl budget.

- For a good crawl budget, update the content on your website regularly, remove any duplicate content, and keep only high-quality pages.

- Duplicate title tags and meta tags can negatively impact your website’s crawl budget.

- Your website’s load speed is a crucial factor in determining its crawl rate.
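A quick way to spot duplicate title tags (or meta descriptions) is to group URLs by their text. The sketch below assumes you already have a URL-to-title mapping, for example from a site crawl; the function name `find_duplicates` and the sample pages are hypothetical.

```python
from collections import defaultdict


def find_duplicates(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs that share the same title (or meta description).

    `pages` maps URL -> title text. Returns only texts used by two or more URLs.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        # Normalize whitespace and case so trivially different titles still match.
        key = " ".join(text.split()).lower()
        groups[key].append(url)
    return {key: sorted(urls) for key, urls in groups.items() if len(urls) > 1}


if __name__ == "__main__":
    # Hypothetical crawl export: URL -> <title> text.
    titles = {
        "https://www.domain.com/electronics/bluetooth-head-set/product-name": "Bluetooth Headset | Store",
        "https://www.domain.com/Home-appliance/bluetooth-head-set/product-name": "Bluetooth  Headset | Store",
        "https://www.domain.com/about": "About Us | Store",
    }
    for title, urls in find_duplicates(titles).items():
        print(f"Duplicate title {title!r} used by: {', '.join(urls)}")
```

The same function works for meta descriptions if you pass a URL-to-description mapping instead.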

How to Check Crawl Budget?

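Google Search Console’s Crawl Stats report shows how many crawl requests Googlebot has made to your website and your server’s average response time, so you can monitor both your crawl activity and your website’s speed.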
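Once you have the daily figures from the Crawl Stats report, a little arithmetic gives a rough sense of your crawl budget. The sketch below assumes you have noted the daily crawl requests and average response times by hand; the function name, the sample numbers, and the "days to cover the site" rule of thumb are our own assumptions, not a Google metric.

```python
def crawl_summary(daily_requests: list[int], daily_avg_response_ms: list[float],
                  pages_to_index: int) -> dict[str, float]:
    """Summarize daily crawl figures (hypothetical input, e.g. noted by hand
    from Search Console's Crawl Stats report)."""
    if not daily_requests or len(daily_requests) != len(daily_avg_response_ms):
        raise ValueError("need one response-time figure per day of requests")
    total_requests = sum(daily_requests)
    if total_requests == 0:
        raise ValueError("no crawl requests recorded")
    avg_requests = total_requests / len(daily_requests)
    # Weight each day's average response time by that day's request count.
    weighted_ms = sum(r * ms for r, ms in zip(daily_requests, daily_avg_response_ms)) / total_requests
    return {
        "avg_requests_per_day": avg_requests,
        "avg_response_ms": weighted_ms,
        # Rough rule of thumb (an assumption): days needed to request each page once
        # at the current rate. Crawl requests also cover images, CSS, recrawls, etc.,
        # so the real figure for HTML pages is likely higher.
        "days_to_cover_site": pages_to_index / avg_requests,
    }


if __name__ == "__main__":
    # Hypothetical three days of figures for a site with 20,000 pages.
    summary = crawl_summary([1200, 950, 1100], [310.0, 420.0, 280.0], 20000)
    for name, value in summary.items():
        print(f"{name}: {value:.1f}")
```

If the estimated number of days is large compared with how often your content changes, crawl budget is worth optimizing.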

How Does Crawl Budget Affect Your Website’s Rank?

Googlebot must crawl your website before your pages can rank in SERPs. However, a higher crawl budget does not guarantee higher rankings. That said, a low crawl rate can significantly lower your website’s rank, because new or updated pages may not be crawled and indexed promptly. You may take professional assistance from a reputed digital marketing company in Chennai.

Steps to Optimize Google Crawl Budget

Crawl budget optimization differs from general search engine optimization (SEO): SEO emphasizes the user experience, while crawl budget optimization is about how easily your website can be crawled. Here are a few techniques to optimize your Google crawl budget:

Clean Up:

An XML sitemap gives crawlers an organized, structured list of the pages on your website. Update your sitemap regularly and keep it free of clutter, such as duplicate, redirected, or broken URLs. Many tools, such as Website Auditor, can generate a sitemap for a clean website.
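If you prefer to generate the sitemap yourself, Python’s standard library is enough. This is a minimal sketch assuming you already have a list of (URL, last-modified date) pairs, for example from your CMS; the function name `build_sitemap` and the sample URLs are hypothetical. It follows the sitemaps.org format and skips duplicate URLs to keep the file clean.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build an XML sitemap from (url, last_modified) pairs.

    `last_modified` is a W3C date such as "2024-01-15". Duplicate URLs are
    skipped so the sitemap lists each page only once.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen: set[str] = set()
    for loc, lastmod in pages:
        if loc in seen:
            continue
        seen.add(loc)
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    # With an explicit encoding, tostring() returns bytes that include the XML declaration.
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True).decode("utf-8")


if __name__ == "__main__":
    # Hypothetical pages; in practice these would come from your CMS or a crawl.
    print(build_sitemap([
        ("https://www.domain.com/", "2024-01-15"),
        ("https://www.domain.com/about", "2024-01-10"),
        ("https://www.domain.com/", "2024-01-15"),  # duplicate, skipped
    ]))
```

ElementTree escapes characters such as `&` in URLs automatically, as the sitemap format requires.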


E-commerce websites are often plagued by duplicate content. For instance, a product that belongs to more than one category is available under each category path, as shown below:

www.domain.com/electronics/bluetooth-head-set/product-name

www.domain.com/Home-appliance/bluetooth-head-set/product-name

You can resolve this by adding a canonical tag that points to the preferred URL, or a noindex tag on the duplicate pages.
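Here is a small sketch of how a site might generate the canonical tag for such products. It assumes product URLs end in the product slug and that you keep a hypothetical `primary_path` mapping from each slug to the category path you have chosen as its preferred location; the function name is also hypothetical. The resulting tag goes in the `<head>` of every duplicate page.

```python
from html import escape
from urllib.parse import urlsplit


def canonical_tag(url: str, primary_path: dict[str, str], domain: str) -> str:
    """Return the <link rel="canonical"> tag for a product page.

    Assumes product URLs end in the product slug, e.g.
    https://www.domain.com/electronics/bluetooth-head-set/product-name,
    and that `primary_path` (hypothetical) maps each slug to the category
    path chosen as that product's preferred location.
    """
    slug = urlsplit(url).path.rstrip("/").rsplit("/", 1)[-1]
    preferred = f"https://{domain}/{primary_path[slug]}/{slug}"
    # Escape the URL so it is safe inside an HTML attribute.
    return f'<link rel="canonical" href="{escape(preferred, quote=True)}">'


if __name__ == "__main__":
    primary = {"product-name": "electronics/bluetooth-head-set"}
    for page in (
        "https://www.domain.com/electronics/bluetooth-head-set/product-name",
        "https://www.domain.com/Home-appliance/bluetooth-head-set/product-name",
    ):
        # Both duplicate paths print the same canonical tag.
        print(canonical_tag(page, primary, "www.domain.com"))
```

Because both category paths resolve to the same canonical URL, Google is told which version to treat as the main page.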

Crawlable Pages:

Crawlers can index your pages only if they can follow the links on your website. It is therefore critical to configure .htaccess and robots.txt so that they do not block important pages on your website. You should also provide a text version of any heavy media files on your pages. If you want to keep a page out of Google’s index, you can do so manually with either of the following:

- Noindex meta tag: <meta name="robots" content="noindex"/>, placed in the <head> section of your page.

- X-Robots-Tag: the header X-Robots-Tag: noindex, sent in the page’s HTTP response.
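Both checks above can be automated with the standard library. This minimal sketch assumes you have the text of your robots.txt file and, for each page, its response headers and HTML; the helper names `blocked_urls` and `is_noindex` are hypothetical. Note that Python’s `urllib.robotparser` may not interpret every rule exactly as Googlebot does, so treat it as a first pass.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs in `urls` that robots.txt disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


class _RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.directives.append(attributes.get("content") or "")


def is_noindex(headers: dict[str, str], html: str) -> bool:
    """True if the X-Robots-Tag header or a robots meta tag contains 'noindex'."""
    header = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    meta = _RobotsMetaParser()
    meta.feed(html)
    meta.close()
    return any("noindex" in value.lower() for value in [header, *meta.directives])


if __name__ == "__main__":
    # Hypothetical robots.txt and page; replace with your own site's data.
    robots = "User-agent: *\nDisallow: /cart/\n"
    print(blocked_urls(robots, ["https://www.domain.com/cart/checkout",
                                "https://www.domain.com/electronics/"]))
    print(is_noindex({}, '<head><meta name="robots" content="noindex"/></head>'))
```

Running `blocked_urls` over the URLs in your sitemap is a quick way to confirm that no important page is accidentally disallowed.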

Limit Usage of Heavy Files and Redirect Chains:

Heavy files include Flash, Silverlight, and JavaScript content on your website. Search engines may not be able to read these files fully, which can affect how your pages are indexed.


Ideally, your website should have very few redirect chains: the longer the redirect chains on your website, the more crawl budget they waste. Also remove any broken or incorrect redirects. Links that return a 404 (Not Found) error should be fixed or removed promptly. You can get a list of URLs on your website that return 404 errors from Google Search Console, and redirect those URLs to a relevant new page.
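Redirect chains are easy to find and flatten once you have your redirects as a source-to-target map, for example exported from your server configuration. In this sketch the helper names and the sample paths are hypothetical, and `max_hops=10` is simply a safety limit we chose. `flatten` rewrites every redirect to point straight at its final destination, so each old URL costs a single hop.

```python
def follow_redirects(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Return the chain of URLs visited from `start` via the `redirects` map.

    Raises ValueError on a redirect loop or a chain longer than `max_hops`.
    """
    chain = [start]
    seen = {start}
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in seen:
            raise ValueError(f"redirect loop: {' -> '.join(chain + [target])}")
        chain.append(target)
        seen.add(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} redirects starting at {start}")
    return chain


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every redirecting URL straight at its final destination (one hop)."""
    return {source: follow_redirects(source, redirects)[-1] for source in redirects}


if __name__ == "__main__":
    # Hypothetical redirects, including an old URL that used to return a 404.
    redirects = {"/old-headset": "/headset", "/headset": "/electronics/headset",
                 "/electronics/headset": "/electronics/bluetooth-head-set/product-name"}
    print(follow_redirects("/old-headset", redirects))
    print(flatten(redirects))
```

After flattening, update internal links to point at the final URLs as well, so crawlers do not need the redirects at all.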

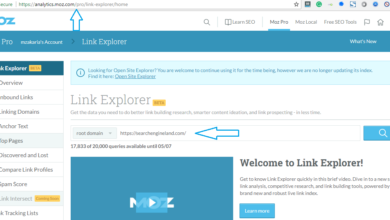


Conclusion:

In a nutshell, keeping your website clean, with a proper XML sitemap, few redirects, and no broken links, goes a long way toward optimizing your crawl budget.
